AI-Powered Data Extraction from Scientific Papers: Technical Guide

Introduction

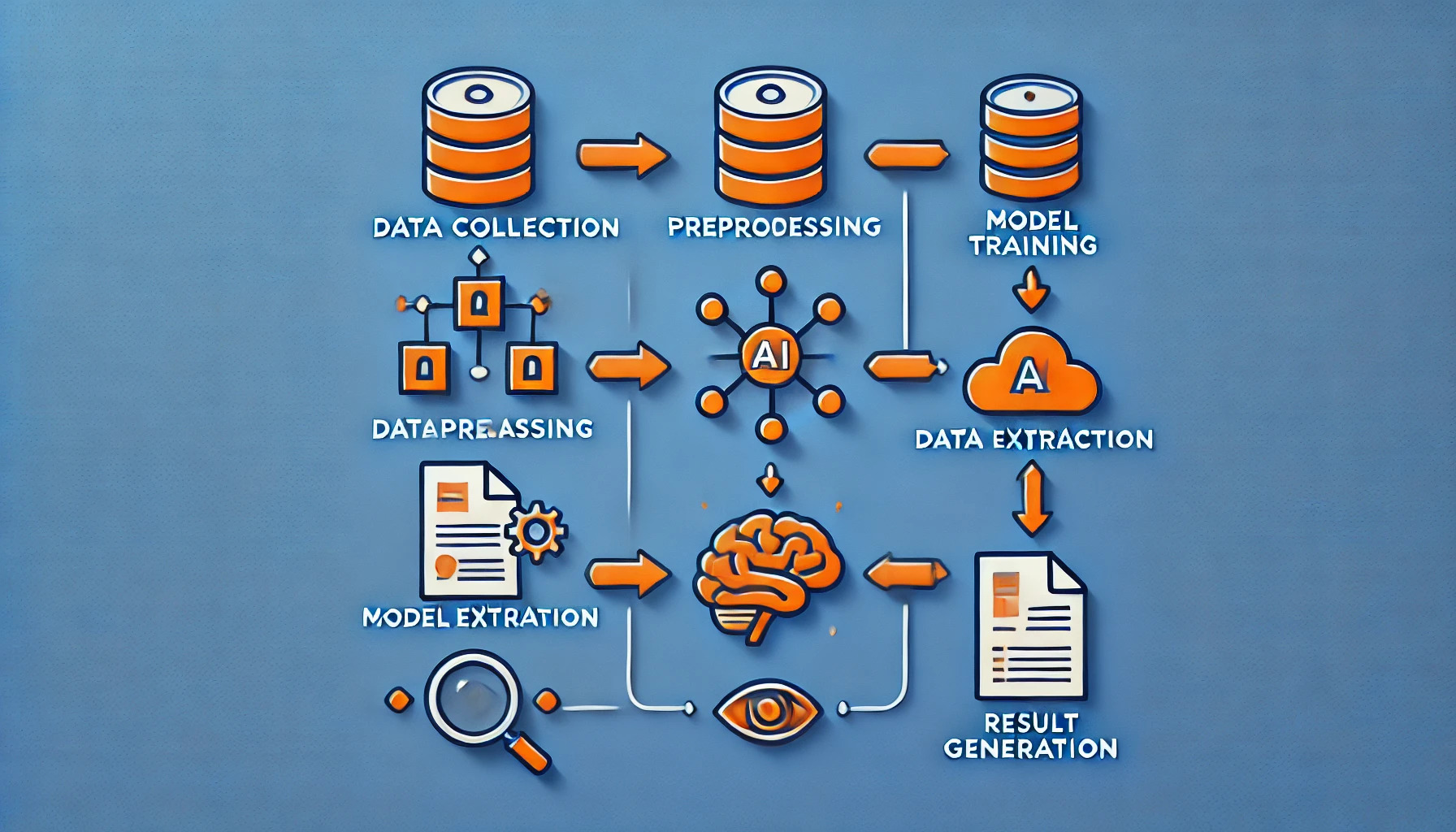

Extracting structured data from scientific papers is a complex task, and artificial intelligence has made it considerably more tractable. This technical guide walks you through developing an AI-powered system for extracting data from scientific literature, focusing on the underlying principles and techniques.

Before we dive in, it’s worth noting that there are existing platforms that leverage AI for scientific literature search and analysis. For instance, Semantic Scholar uses AI to help researchers discover and understand scientific literature more efficiently. While our guide focuses on building your own system, these platforms can be valuable resources for understanding state-of-the-art approaches in AI-powered literature analysis.

1. Data Preparation

The first step in any AI-based extraction system is preparing your data:

1.1. Document Conversion: Convert all papers to a consistent format (e.g., plain text or XML) that can be easily processed by AI algorithms.

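1.2. Text Normalization: Apply techniques such as lowercasing, removing special characters, and handling ligatures to standardize the text. Apply these selectively: case and symbols often carry meaning in scientific text (gene symbols, chemical formulas, units), so aggressive normalization can destroy information you later want to extract.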

1.3. Tokenization: Break the text into individual tokens (words, punctuation, etc.) to facilitate further processing.

1.4. Sentence Segmentation: Identify and delineate individual sentences within the text.

When working with biomedical literature, you might want to source your papers from specialized databases like PubMed. PubMed provides access to a vast collection of biomedical literature, which can be an excellent source for training and testing your AI extraction system.
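For the document conversion step in 1.1, papers that are already available as XML can be flattened to plain text with Python's standard library. The sketch below assumes a heavily simplified, JATS-like layout (`sec`, `title`, and `p` elements); the sample document and the function name `xml_to_sections` are illustrative assumptions, and real publisher XML will usually need more handling.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified JATS-like document used only for illustration
SAMPLE_XML = """
<article>
  <body>
    <sec>
      <title>Methods</title>
      <p>Samples were heated to <bold>37</bold> degrees.</p>
      <p>Each run was repeated three times.</p>
    </sec>
    <sec>
      <title>Results</title>
      <p>Yield increased by 12 percent.</p>
    </sec>
  </body>
</article>
""".strip()

def xml_to_sections(xml_string):
    """Return a list of (section_title, section_text) pairs."""
    root = ET.fromstring(xml_string)
    sections = []
    for sec in root.iter("sec"):
        title = sec.findtext("title", default="").strip()
        # itertext() also collects text nested inside inline tags such as <bold>
        paragraphs = [" ".join("".join(p.itertext()).split()) for p in sec.findall("p")]
        sections.append((title, " ".join(paragraphs)))
    return sections

for title, text in xml_to_sections(SAMPLE_XML):
    print(f"{title}: {text}")
```

Keeping the section title next to its text at this stage makes the later section identification step easier, since the structure does not have to be inferred again from flat text.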

Here’s a Python code snippet demonstrating basic text normalization and tokenization:

import re
from nltk.tokenize import word_tokenize, sent_tokenize

def normalize_and_tokenize(text):
    # Split into sentences first: stripping punctuation beforehand would
    # remove the periods that sent_tokenize relies on
    sentences = sent_tokenize(text)
    tokenized_sentences = []
    for sentence in sentences:
        # Lowercase, then drop everything except letters, digits, and whitespace
        cleaned = re.sub(r'[^a-z0-9\s]', '', sentence.lower())
        # Tokenize the cleaned sentence into words
        tokenized_sentences.append(word_tokenize(cleaned))
    return tokenized_sentences

# Example usage (needs the NLTK tokenizer data, e.g. nltk.download('punkt');
# recent NLTK releases may ask for 'punkt_tab' instead)
raw_text = "This is a sample text. It contains two sentences."
processed_text = normalize_and_tokenize(raw_text)
print(processed_text)

2. Model Selection and Training

Choosing the right AI model is crucial for effective data extraction:

2.1. Model Architecture: Select an appropriate architecture based on your extraction needs. Common choices include:

- Recurrent Neural Networks (RNNs) for sequence modeling

- Convolutional Neural Networks (CNNs) for layout analysis

- Transformer models for contextual understanding

2.2. Transfer Learning: Leverage pre-trained models and fine-tune them on your specific scientific domain to improve performance and reduce training time.

2.3. Training Data Preparation: Create a labeled dataset of scientific papers with the information you want to extract clearly marked.

2.4. Model Training: Train your chosen model on the prepared dataset, and use techniques like cross-validation to estimate how well it generalizes to unseen papers.
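As a rough illustration of the cross-validation mentioned in 2.4, the sketch below builds k-fold splits at the level of whole papers, so that sentences from one paper never appear in both the training and validation sets. The paper identifiers and the helper name `k_fold_splits` are assumptions made for this example.

```python
import random

def k_fold_splits(paper_ids, k=5, seed=0):
    """Yield (train_ids, validation_ids) pairs for k-fold cross-validation."""
    ids = list(paper_ids)
    random.Random(seed).shuffle(ids)
    # Deal the shuffled papers into k folds of near-equal size
    folds = [ids[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [pid for j, fold in enumerate(folds) if j != i for pid in fold]
        yield train, validation

# Hypothetical paper identifiers
papers = [f"paper_{n}" for n in range(10)]
for fold_number, (train, validation) in enumerate(k_fold_splits(papers, k=5)):
    print(fold_number, len(train), len(validation))
```

Each paper serves as validation data exactly once, and averaging the metric over the folds gives a less noisy estimate than a single train/validation split.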

If you’re new to AI research assistance, you might find it helpful to check out this guide on the best AI research assistants. It provides valuable insights into how AI can support various aspects of the research process, including data extraction.

Here’s a simplified example of how you might define a basic RNN model for sequence labeling using PyTorch:

import torch
import torch.nn as nn

class SimpleRNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        self.hidden_size = hidden_size
        self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        # x has shape (batch, seq_len, input_size)
        # Use the per-token outputs rather than only the final hidden state,
        # so that every token receives its own label scores
        outputs, _ = self.rnn(x)
        return self.fc(outputs)  # (batch, seq_len, output_size)

# Example usage
input_size = 100  # Size of word embeddings
hidden_size = 128
output_size = 5  # Number of label classes
model = SimpleRNN(input_size, hidden_size, output_size)
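A sequence labeling model of this kind predicts one class per token, so the labeled dataset from 2.3 has to be turned into per-token tags. One common scheme is BIO tagging (Begin, Inside, Outside). The sketch below is a minimal example; the label set, with two hypothetical entity types giving five classes, is an assumption chosen to match `output_size = 5`.

```python
def spans_to_bio(tokens, spans):
    """Convert labeled token spans into one BIO tag per token.

    spans is a list of (start, end, label) tuples with end exclusive.
    """
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags

# Hypothetical label set; two entity types yield five classes in total
LABELS = ["O", "B-VALUE", "I-VALUE", "B-UNIT", "I-UNIT"]
LABEL_TO_ID = {label: i for i, label in enumerate(LABELS)}

tokens = ["heated", "to", "37", "degrees", "Celsius"]
spans = [(2, 3, "VALUE"), (3, 5, "UNIT")]
tags = spans_to_bio(tokens, spans)
label_ids = [LABEL_TO_ID[tag] for tag in tags]
print(list(zip(tokens, tags)))
print(label_ids)
```

The integer ids are what a loss function such as cross-entropy would compare against the model's per-token scores.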

3. Feature Engineering

Effective feature engineering can significantly improve the performance of your extraction model:

3.1. Text Features: Extract features such as:

- TF-IDF scores

- N-grams

- Part-of-speech tags

- Named entity recognition results

3.2. Structural Features: Capture the document’s structure with features like:

- Section headings

- Paragraph positions

- List and table identifiers

3.3. Semantic Features: Incorporate domain-specific knowledge:

- Scientific terminology

- Citation patterns

- Equation detection
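Several of the structural and semantic features listed in 3.2 and 3.3 can be approximated with simple rules before any model is involved. The sketch below computes a few per-line features; the regular expressions and the feature names are illustrative assumptions, and real papers use many more citation and heading styles than these patterns cover.

```python
import re

# Illustrative patterns only
NUMERIC_CITATION = re.compile(r"\[\d+(?:\s*[,-]\s*\d+)*\]")
AUTHOR_YEAR_CITATION = re.compile(r"\([A-Z][A-Za-z-]+(?: et al\.)?,? \d{4}\)")
SECTION_HEADING = re.compile(
    r"^(?:\d+(?:\.\d+)*\.?\s+)?"
    r"(?:abstract|introduction|methods|results|discussion|conclusion)s?$",
    re.IGNORECASE,
)

def line_features(line, position, total_lines):
    """Return a small feature dictionary for one line of a paper."""
    stripped = line.strip()
    citations = NUMERIC_CITATION.findall(stripped) + AUTHOR_YEAR_CITATION.findall(stripped)
    return {
        "is_heading": bool(SECTION_HEADING.match(stripped)),
        "relative_position": position / max(total_lines - 1, 1),
        "citation_count": len(citations),
        "has_equation_symbol": bool(re.search(r"[=≈≤≥±∑∫]", stripped)),
    }

lines = [
    "2. Methods",
    "Yields were measured as described previously [3, 4].",
    "The rate follows k = A exp(-E/RT) (Arrhenius, 1889).",
]
for i, line in enumerate(lines):
    print(line_features(line, i, len(lines)))
```

Features like these can be concatenated with text features such as TF-IDF scores to give a model both content and layout signals.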

When working with scientific literature, it’s worth exploring how platforms like Semantic Scholar handle feature engineering. Their approach to understanding and categorizing scientific text can provide valuable insights for your own feature engineering process.

Here’s a Python snippet demonstrating basic TF-IDF feature extraction:

from sklearn.feature_extraction.text import TfidfVectorizer

def extract_tfidf_features(documents):
    # Keep at most the 1000 most frequent terms as features
    vectorizer = TfidfVectorizer(max_features=1000)
    tfidf_matrix = vectorizer.fit_transform(documents)
    feature_names = vectorizer.get_feature_names_out()
    return tfidf_matrix, feature_names

# Example usage
documents = [
    "This is the first document.",
    "This document is the second document.",
    "And this is the third one.",
]
tfidf_matrix, feature_names = extract_tfidf_features(documents)
print(f"TF-IDF matrix shape: {tfidf_matrix.shape}")
print(f"Feature names: {feature_names[:10]}")
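The n-gram features listed in 3.1 are just sliding windows over the token sequence. As a minimal sketch, assuming whitespace tokenization and a hypothetical helper named `word_ngrams`:

```python
from collections import Counter

def word_ngrams(tokens, n):
    """Return all contiguous n-token windows as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = "the reaction rate increased as the reaction temperature increased".split()
bigram_counts = Counter(word_ngrams(tokens, 2))
print(bigram_counts.most_common(3))
```

With scikit-learn, the same idea is available through the `ngram_range` parameter of `TfidfVectorizer`, which lets TF-IDF scores cover multi-word terms as well as single words.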

4. Extraction Pipeline Development

Develop a robust pipeline to process papers and extract the desired information:

4.1. Preprocessing: Apply the data preparation steps to incoming papers.

4.2. Section Identification: Use your trained model to identify and label different sections of the paper (e.g., abstract, methods, results).

4.3. Entity Extraction: Extract specific entities such as:

- Numerical values and units

- Chemical compounds

- Gene names

- Statistical results

4.4. Relationship Extraction: Identify relationships between extracted entities, such as cause-effect relationships or comparisons.

4.5. Table and Figure Extraction: Implement computer vision techniques to identify and extract data from tables and figures.
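For the entity extraction step in 4.3, numerical values with units and reported p-values are regular enough that a rule-based baseline is often a useful starting point. The sketch below is one such baseline; the unit list, the patterns, and the function names are illustrative assumptions, not a complete vocabulary.

```python
import re

# Illustrative unit list; a real system would use a much larger vocabulary
UNITS = r"(?:mg/kg|mg|kg|g|mL|L|mM|nm|mm|cm|°C|K|%)"
VALUE_WITH_UNIT = re.compile(rf"(?<![\w.])(\d+(?:\.\d+)?)\s*({UNITS})(?![A-Za-z])")
P_VALUE = re.compile(r"\bp\s*([<>=])\s*(0?\.\d+)", re.IGNORECASE)

def extract_measurements(text):
    """Return (value, unit) pairs found in the text."""
    return [(float(value), unit) for value, unit in VALUE_WITH_UNIT.findall(text)]

def extract_p_values(text):
    """Return (comparator, value) pairs for reported p-values."""
    return [(op, float(value)) for op, value in P_VALUE.findall(text)]

sentence = "Mice received 5.5 mg/kg at 37 °C, reducing tumor size by 42% (p < 0.05)."
print(extract_measurements(sentence))
print(extract_p_values(sentence))
```

A baseline like this also gives you something to compare a trained model against, and its output can help pre-annotate training data for human correction.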

When developing your extraction pipeline, consider how it might integrate with existing research tools. For example, you could design your system to complement the search capabilities of PubMed, allowing researchers to not only find relevant papers but also automatically extract key data from them.

Here’s a basic example of how you might structure an extraction pipeline:

class ExtractionPipeline:
    def __init__(self, preprocessor, section_identifier, entity_extractor, relationship_extractor):
        self.preprocessor = preprocessor
        self.section_identifier = section_identifier
        self.entity_extractor = entity_extractor
        self.relationship_extractor = relationship_extractor

    def process_paper(self, paper):
        # Preprocess the paper
        processed_text = self.preprocessor.process(paper)

        # Identify sections
        sections = self.section_identifier.identify(processed_text)

        # Extract entities from each section
        entities = {}
        for section, text in sections.items():
            entities[section] = self.entity_extractor.extract(text)

        # Extract relationships between entities
        relationships = self.relationship_extractor.extract(entities)

        return {
            'sections': sections,
            'entities': entities,
            'relationships': relationships
        }

# Usage would involve implementing each component and then:
# pipeline = ExtractionPipeline(preprocessor, section_identifier, entity_extractor, relationship_extractor)
# results = pipeline.process_paper(paper_text)
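To make the component interfaces more concrete, here is a rule-based stand-in for the section identifier. It is only a sketch: the class name `HeadingSectionIdentifier` and the heading list are hypothetical, and it assumes the preprocessed text still contains line breaks. A trained model would replace the rules but could expose the same `identify` method.

```python
import re

class HeadingSectionIdentifier:
    """Rule-based stand-in for a trained section classifier (illustrative only)."""

    HEADINGS = ("abstract", "introduction", "methods", "results", "discussion", "conclusion")

    def __init__(self):
        names = "|".join(self.HEADINGS)
        # A heading is a line holding only an optional number and a known name
        self.pattern = re.compile(rf"^\s*(?:\d+\.?\s+)?({names})\s*$", re.IGNORECASE)

    def identify(self, text):
        """Return a dict mapping section name to the text under that heading."""
        sections = {}
        current = "front_matter"  # Text before the first recognized heading
        for line in text.splitlines():
            match = self.pattern.match(line)
            if match:
                current = match.group(1).lower()
                sections.setdefault(current, [])
            elif line.strip():
                sections.setdefault(current, []).append(line.strip())
        return {name: " ".join(lines) for name, lines in sections.items()}

paper_text = """A Study of Enzyme Kinetics
Abstract
We measure reaction rates.
1. Methods
Samples were incubated at 37 °C.
Rates were recorded hourly.
2. Results
The rate doubled.
"""
sections = HeadingSectionIdentifier().identify(paper_text)
for name, text in sections.items():
    print(f"{name}: {text}")
```

Because the return value is a plain dictionary, it plugs directly into the loop over `sections.items()` in `process_paper`.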

5. Post-processing and Validation

After extraction, it’s crucial to validate and refine the results:

5.1. Data Cleaning: Remove any extraction artifacts or inconsistencies in the extracted data.

5.2. Confidence Scoring: Implement a system to score the confidence of each extracted piece of information.

5.3. Human-in-the-loop Validation: Develop an interface for human experts to review and correct the AI’s extractions.

5.4. Feedback Loop: Use validated results to continually improve your AI model through techniques like active learning.
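The data cleaning step in 5.1 often comes down to normalizing values and removing duplicates. The sketch below assumes extracted records are dictionaries with `entity`, `value`, and `unit` keys; that record layout, the alias table, and the treatment of commas as thousands separators are all assumptions made for illustration.

```python
def clean_extractions(records):
    """Normalize and deduplicate extracted (entity, value, unit) records."""
    # Hypothetical alias table mapping unit spellings to one canonical form
    unit_aliases = {"milligram": "mg", "milligrams": "mg", "ml": "mL", "percent": "%"}
    cleaned, seen = [], set()
    for record in records:
        entity = " ".join(record["entity"].split()).lower()
        unit = record["unit"].strip()
        unit = unit_aliases.get(unit.lower(), unit)
        try:
            # Assumes commas are thousands separators, as in "1,500"
            value = float(str(record["value"]).replace(",", ""))
        except ValueError:
            continue  # Drop records whose value is not a parseable number
        key = (entity, value, unit)
        if key not in seen:
            seen.add(key)
            cleaned.append({"entity": entity, "value": value, "unit": unit})
    return cleaned

raw = [
    {"entity": "Dose ", "value": "1,500", "unit": "milligrams"},
    {"entity": "dose", "value": 1500, "unit": "mg"},
    {"entity": "yield", "value": "n/a", "unit": "%"},
    {"entity": "Yield", "value": "42", "unit": "percent"},
]
print(clean_extractions(raw))
```

In practice you may prefer to route unparseable records to human review rather than drop them silently.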

For an example of how AI can assist in the research process, including data validation and analysis, check out this guide on AI research assistants. It provides insights into how AI can support various stages of research, from literature review to data analysis.

Here’s a simple example of how you might implement a confidence scoring system:

import numpy as np

def confidence_score(prediction, threshold=0.8):
    # Assume prediction is a probability distribution over possible labels
    max_prob = np.max(prediction)
    if max_prob > threshold:
        return max_prob
    else:
        return 0  # Below threshold, no confident prediction

# Example usage
predictions = np.array([0.1, 0.2, 0.7, 0.0])
score = confidence_score(predictions)
print(f"Confidence score: {score}")
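Confidence scores also give a simple way to decide which extractions to send to human reviewers, which ties together 5.3 and 5.4. One common active learning strategy is uncertainty sampling: review the items the model is least sure about first. The sketch below ranks items by the entropy of their predicted distribution; the item ids, the probabilities, and the function names are hypothetical.

```python
import math

def entropy(probabilities):
    """Shannon entropy of a probability distribution, in bits."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def select_for_review(predictions, budget=2):
    """Return the ids of the `budget` most uncertain predictions.

    predictions maps an extraction id to its probability distribution over labels.
    """
    ranked = sorted(predictions, key=lambda item_id: entropy(predictions[item_id]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs for four extracted items
predictions = {
    "item_a": [0.97, 0.01, 0.01, 0.01],
    "item_b": [0.40, 0.35, 0.15, 0.10],
    "item_c": [0.70, 0.20, 0.10, 0.00],
    "item_d": [0.25, 0.25, 0.25, 0.25],
}
print(select_for_review(predictions))
```

Corrections collected this way can be added to the training set, so reviewer time is spent where it is most likely to improve the model.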

Conclusion

Developing an AI-powered system for extracting data from scientific papers is a complex but rewarding endeavor. By following this technical guide, you’ll be well-equipped to tackle the challenges of data preparation, model development, feature engineering, pipeline creation, and result validation.

Remember that the field of AI is rapidly evolving, so stay current with the latest research and techniques. Platforms like Semantic Scholar and PubMed are constantly innovating in this space, and keeping an eye on their developments can provide valuable insights for your own work.

Happy extracting!

![AI-Powered Data Extraction from Scientific Papers: Technical Guide 2 steps of data preparation, from raw PDFs to tokenized and segmented text](https://jsonviewer.ai/wp-content/uploads/2024/09/DALL·E-2024-09-18-13.51.05-A-clean-and-simplified-diagram-illustrating-the-steps-of-data-preparation-from-raw-PDFs-to-tokenized-and-segmented-text.-Keep-the-same-design-and-lay-1.jpg)